Securing AI Training Data: How Bedrock Scans AWS EFS for Sensitive Information

Praveen Yarlagadda : Jun 11, 2025 1:28:52 PM

Securing your training data is essential, not just for compliance but for model integrity and brand protection. If you’re storing AI training datasets in Amazon EFS, how do you ensure those volumes aren’t quietly harboring PII, credentials, or other sensitive content that adds risk to the organization?

Bedrock Security’s EFS scanning architecture is purpose-built for modern cloud-native environments. Unlike other approaches, it offers ephemeral, horizontally scalable, privacy-preserving scanning that ensures data does not leave the customer’s environment. Here’s how it works, and why it’s different.

Why EFS Scanning Matters

AI training pipelines are increasingly dynamic and decentralized. Data scientists and ML engineers often mount EFS access points across multiple subnets for performance and scale, but this introduces risk and raises several questions:

- Is there sensitive data buried in your feature stores?
- Are files being reused from legacy workloads?
- Who has access, and are those controls auditable?

Without contextual scanning and visibility, you’re flying blind.

How Bedrock Scans AWS EFS, and What Makes It Unique

1. Dedicated, Isolated VPC for Bedrock Scanners

Bedrock deploys scanners into a purpose-built, ephemeral VPC environment:

- Private subnets host the scanning workloads
- A NAT gateway securely handles outbound metadata transfer
- VPC peering connects Bedrock to customer EFS-hosting VPCs

🔍 What’s Unique:

Most scanning tools require deployment within the customer’s VPC or demand heavy IAM privileges. Bedrock flips that model, isolating scanning infrastructure from your core environment, reducing blast radius, and simplifying compliance reviews.
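VPC peering only works between VPCs whose CIDR blocks do not overlap, so address ranges are worth checking before a scanner VPC is peered with the VPCs that host EFS. The sketch below uses Python's standard `ipaddress` module. The CIDR values and the function name `peering_conflicts` are illustrative assumptions, not Bedrock's actual configuration.

```python
import ipaddress


def peering_conflicts(scanner_cidr, customer_cidrs):
    """Return the customer CIDRs that overlap the scanner VPC's CIDR.

    VPC peering requires non-overlapping address ranges, so any CIDR
    returned here would block a peering connection.
    """
    scanner_net = ipaddress.ip_network(scanner_cidr)
    return [
        cidr
        for cidr in customer_cidrs
        if scanner_net.overlaps(ipaddress.ip_network(cidr))
    ]


# Hypothetical address plan: one scanner VPC, three customer VPCs.
conflicts = peering_conflicts(
    "10.200.0.0/16",
    ["10.0.0.0/16", "10.200.128.0/20", "172.31.0.0/16"],
)
print(conflicts)  # ['10.200.128.0/20']
```

An empty list means the hypothetical scanner range can be peered with every listed VPC.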

2. Scoped Access Point-Based Scanning

Each scanner mounts a single EFS access point with precise IAM scope:

- Metadata is read recursively and securely sent to Bedrock SaaS Service
- No full file transfer; raw data never leaves the customer’s account
- Bedrock SaaS Service performs AI-based classification and tagging
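A recursive metadata read of this kind can be approximated with the Python standard library. This is a minimal sketch, assuming the access point is already mounted at a local path. `collect_metadata` and the record fields are hypothetical names, not Bedrock's schema. The code only calls `lstat` and never opens a file's contents.

```python
import os
import stat
import tempfile


def collect_metadata(mount_path):
    """Walk a mounted directory tree and return per-file metadata records.

    Only os.walk and os.lstat are used, so file contents are never opened.
    """
    records = []
    for dirpath, _dirnames, filenames in os.walk(mount_path):
        for name in filenames:
            full_path = os.path.join(dirpath, name)
            info = os.lstat(full_path)  # lstat: do not follow symlinks
            if not stat.S_ISREG(info.st_mode):
                continue  # skip symlinks, sockets, and other special files
            records.append(
                {
                    "path": os.path.relpath(full_path, mount_path),
                    "size_bytes": info.st_size,
                    "modified_epoch": int(info.st_mtime),
                    "owner_uid": info.st_uid,
                    "mode": stat.filemode(info.st_mode),
                }
            )
    return records


# Demo against a temporary directory standing in for an EFS mount point.
with tempfile.TemporaryDirectory() as mount:
    os.makedirs(os.path.join(mount, "features"))
    with open(os.path.join(mount, "features", "users.csv"), "w") as fh:
        fh.write("id,email\n")
    demo_records = collect_metadata(mount)

print(demo_records)
```

Each record describes a file without carrying any of its bytes, which matches the "no full file transfer" point above.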

🔍 What’s Unique:

Access-point-level scanning allows fine-grained visibility without compromising data boundaries. Traditional scanners operate at the file system level, introducing broad access risk. Bedrock’s access-point isolation maintains a tight and verifiable security posture.
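One way to express per-access-point scope is an IAM policy that allows only a read-only mount and conditions it on a specific access point ARN. The sketch below builds such a policy document as a Python dictionary. `elasticfilesystem:ClientMount` and the `elasticfilesystem:AccessPointArn` condition key are standard EFS IAM elements. The ARNs, account ID, and function name are placeholders, and nothing here should be read as the exact policy Bedrock deploys.

```python
import json


def scoped_mount_policy(file_system_arn, access_point_arn):
    """Build an identity policy allowing a read-only EFS mount through
    exactly one access point.

    ClientMount without ClientWrite or ClientRootAccess yields read-only
    client access; the condition pins the grant to a single access point.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadOnlyMountViaSingleAccessPoint",
                "Effect": "Allow",
                "Action": ["elasticfilesystem:ClientMount"],
                "Resource": file_system_arn,
                "Condition": {
                    "StringEquals": {
                        "elasticfilesystem:AccessPointArn": access_point_arn
                    }
                },
            }
        ],
    }


# Placeholder ARNs for illustration only.
policy = scoped_mount_policy(
    "arn:aws:elasticfilesystem:us-east-1:111122223333:file-system/fs-0123456789abcdef0",
    "arn:aws:elasticfilesystem:us-east-1:111122223333:access-point/fsap-0123456789abcdef0",
)
print(json.dumps(policy, indent=2))
```

Generating one such policy per scanner keeps each role unable to mount any other access point on the same file system.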

3. Massively Parallel, Horizontally Scalable Architecture

Need to scan 100 access points? Bedrock spins up 100+ ephemeral and cost-optimized serverless scanners:

- Auto-scales based on available access points and SLA needs
- Workloads complete independently with no infrastructure left behind
- Works seamlessly across dev, staging, and production environments

🔍 What’s Unique:

This isn’t just scalable, it’s ephemeral. Bedrock scanners are serverless functions, not persistent EC2 instances or containers. That means zero ops overhead, no long-lived credentials, and a vastly smaller attack surface than alternatives.
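The one-scanner-per-access-point fan-out can be illustrated locally. The sketch below uses a thread pool as a stand-in for serverless functions, which is a simplification rather than how Bedrock runs its scanners. `scan_access_point`, `scan_all`, and the access point IDs are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def scan_access_point(access_point_id):
    """Placeholder for one scanner run. A real scanner would mount the
    access point and read metadata; this one just returns a summary."""
    return {"access_point": access_point_id, "status": "complete"}


def scan_all(access_point_ids, max_workers=32):
    """Run one independent scan per access point and collect the results.

    A failure in one scan is recorded and does not stop the others.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            pool.submit(scan_access_point, ap_id): ap_id
            for ap_id in access_point_ids
        }
        for future in as_completed(futures):
            ap_id = futures[future]
            try:
                results[ap_id] = future.result()
            except Exception as exc:  # keep going if a single scan fails
                results[ap_id] = {
                    "access_point": ap_id,
                    "status": f"failed: {exc}",
                }
    return results


# 100 hypothetical access point IDs, one scan each.
ids = [f"fsap-{n:04d}" for n in range(100)]
summary = scan_all(ids)
print(len(summary), "scans finished")
```

Because each scan shares no state with the others, the pool can be discarded when the last future resolves, mirroring the "no infrastructure left behind" property.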

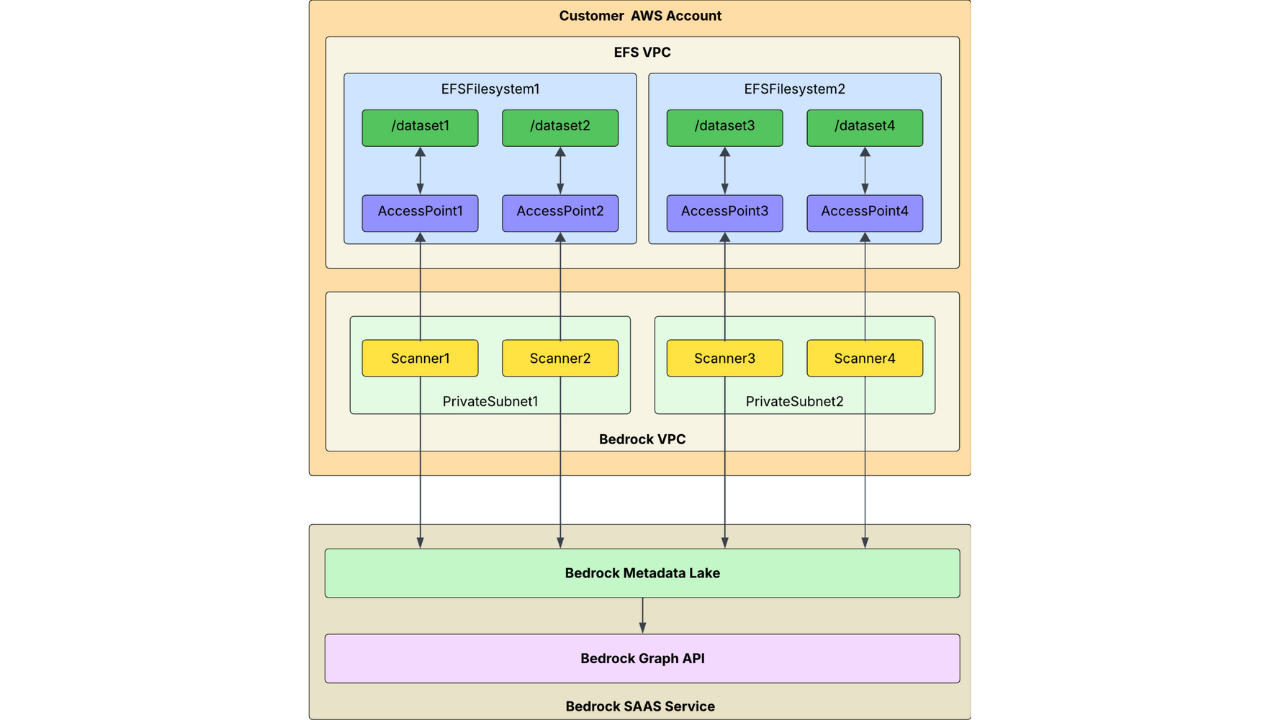

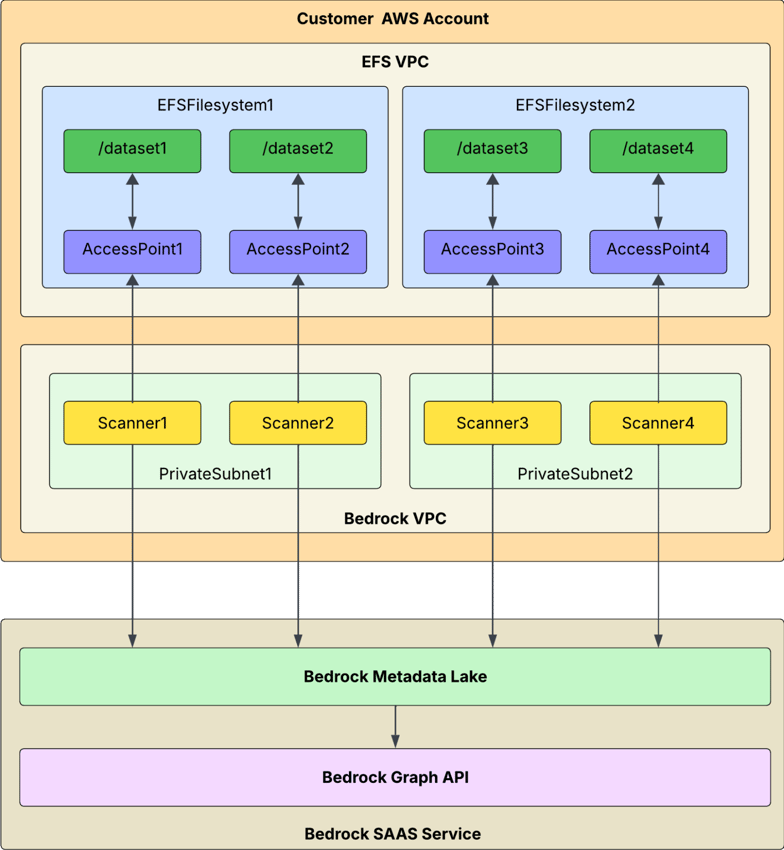

Typical Bedrock Deployment Architecture

Architecture Overview

The scanning workflow:

- Bedrock Outpost deploys inside an isolated VPC
- Each scanner mounts an EFS access point
- File metadata is read and transmitted securely to Bedrock SaaS Service
- Bedrock enriches, classifies, and maps sensitivity to risk, usage, and entitlements

The architecture integrates directly with Bedrock’s Metadata Lake, enabling cross-system correlation and automated tagging for future policy enforcement or lifecycle governance.
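To make the tagging step concrete, here is a deliberately naive, rule-based stand-in for classification. Bedrock describes its classification as AI-based, so this is not its method. The sketch only shows the shape of turning metadata records into tagged records, and the patterns, tag names, and record fields are all assumptions.

```python
import re

# Illustrative rules only: pattern on the file path -> sensitivity tag.
PATH_RULES = [
    (re.compile(r"\.(pem|key|p12)$", re.IGNORECASE), "credentials"),
    (re.compile(r"(ssn|passport|patient|customer)", re.IGNORECASE), "possible-pii"),
]


def tag_record(record):
    """Return a copy of a metadata record with a list of sensitivity tags."""
    tags = [tag for pattern, tag in PATH_RULES if pattern.search(record["path"])]
    return {**record, "tags": tags or ["unclassified"]}


# Hypothetical metadata records, as a scanner might emit them.
records = [
    {"path": "legacy/customer_export.csv", "size_bytes": 1048576},
    {"path": "keys/deploy.pem", "size_bytes": 1704},
    {"path": "features/embeddings.npy", "size_bytes": 52428800},
]
tagged = [tag_record(r) for r in records]
for row in tagged:
    print(row["path"], row["tags"])
```

Tagged records in this form are what downstream policy enforcement or lifecycle rules would key on.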

EFS Scanning Workflow

Why Not Just Scan Inside My VPC?

Some homegrown tools and open-source scripts do this, but they come at a cost:

- You inherit all runtime and IAM complexity
- There's no isolation between scanning logic and production systems
- Managing secrets, logging, and lateral movement risks becomes your problem

Bedrock’s isolated approach removes that operational burden, without sacrificing control or visibility.

Why This Is Different: Bedrock vs. Legacy Scanning Tools

| Feature | Bedrock | Traditional DSPM | DIY/VPC Scripts |
| --- | --- | --- | --- |
| Infrastructure | Isolated VPC with ephemeral functions | Customer-managed agents | Custom EC2/cron jobs |
| Data Movement | Metadata-only (no raw data) | Often requires data export | Depends on script scope |
| Access Scope | Scoped per-access point | File system-wide or IAM role-wide | Variable (often over-provisioned) |
| Scale Model | Auto-parallel with zero infra persistence | Limited scanning due to agent infrastructure design | Manual, brittle parallelism |
| Security Posture | Least privilege, no persistent infra | Elevated, long-lived roles | Risk of config drift |

Wrapping Up

Securing AI training pipelines is not just about compliance; it’s about trust. Bedrock’s EFS scanning capability offers a smarter, safer way to discover and manage sensitive data embedded in AI workloads.

If you're running multi-tenant training pipelines, staging AI data from internal systems, or inheriting legacy datasets, you need to know what's in those files. Bedrock lets you find out, at scale, without slowing anything down or risking a breach.

👉 Explore our platform or schedule a demo to see how EFS scanning fits into your broader data security strategy.
